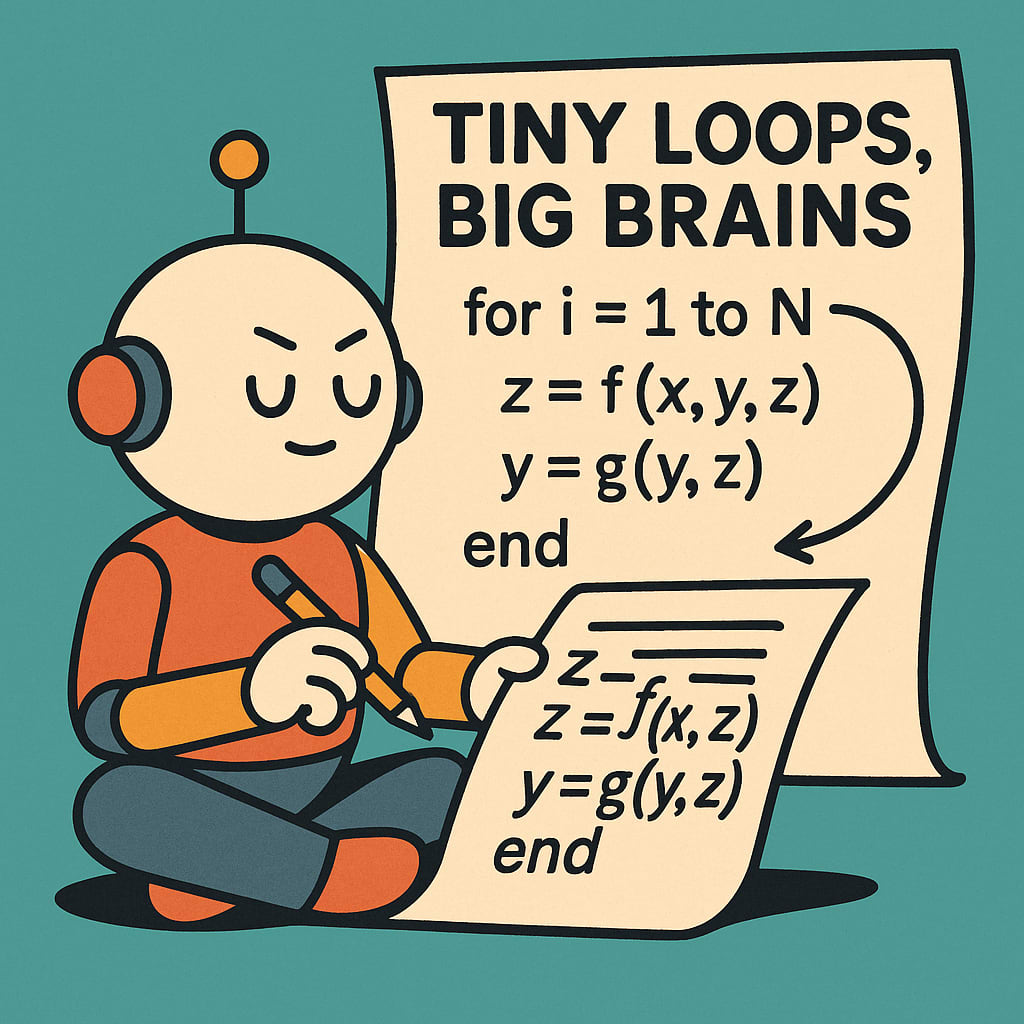

The Sequence AI of the Week #737: Tiny Loops, Big Brains: Inside Samsung's Small Model that has Taken the AI World By Storm | By The Digital Insider

Tiny recursion models are among the most innovative recent AI techniques, and they show how much small models can do.

There’s a quiet but important trend in reasoning research: instead of making models bigger, we’re making them loop. The idea is refreshingly simple. Take a tiny network—on the order of a few million parameters—give it a little working memory, and let it run itself multiple times on the same input, refining an evolving guess until it converges on a solution. Call this family Tiny Recursion Models (TRMs).

If you’ve ever solved Sudoku by penciling in candidates, updating constraints, and iterating until the board stabilizes, you already understand TRMs. They explicitly separate two pieces of state: a proposal (the current best answer) and a scratchpad (internal reasoning features). Then, in a small loop, they first improve the scratchpad using the input and the current proposal, and next refresh the proposal using the improved scratchpad. Repeat. The same tiny network executes both moves, sharing parameters across all steps.
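The two-step loop described above can be sketched in a few lines of plain Python. This is only a toy illustration under stated assumptions, not the published implementation: the single tanh layer, the random untrained weights, the zero initial states, the step count, and the names `make_tiny_net`, `tiny_net`, and `trm_loop` are all hypothetical. Zeroing the input during the proposal update is also just one possible way to let the same weights serve both moves.

```python
import math
import random


def make_tiny_net(dim, seed=0):
    """Build one shared weight matrix of shape (dim, 3 * dim).

    Hypothetical stand-in for the tiny network; weights are random, not trained.
    """
    rng = random.Random(seed)
    scale = 1.0 / math.sqrt(3 * dim)
    return [[rng.gauss(0.0, scale) for _ in range(3 * dim)] for _ in range(dim)]


def tiny_net(weights, x, y, z):
    """One pass of the shared network: tanh(W @ concat(x, y, z))."""
    inp = x + y + z  # list concatenation: input, proposal, scratchpad
    return [math.tanh(sum(w * v for w, v in zip(row, inp))) for row in weights]


def trm_loop(weights, x, steps=5):
    """Alternate scratchpad and proposal updates with the same weights."""
    dim = len(x)
    zeros = [0.0] * dim
    y = list(zeros)  # proposal: the current best answer
    z = list(zeros)  # scratchpad: internal reasoning features
    for _ in range(steps):
        # Move 1: improve the scratchpad using the input and current proposal.
        z = tiny_net(weights, x, y, z)
        # Move 2: refresh the proposal using the improved scratchpad.
        # Assumption: the input slot is zeroed here to mark this as the
        # proposal update while still reusing the same parameters.
        y = tiny_net(weights, zeros, y, z)
    return y, z


if __name__ == "__main__":
    net = make_tiny_net(dim=4)
    proposal, scratchpad = trm_loop(net, [1.0, 0.0, -1.0, 0.5], steps=5)
    print("proposal:", proposal)
    print("scratchpad:", scratchpad)
```

Because the weights are untrained, the output here is meaningless as an answer; the point is only the control flow, where one small set of parameters is reused at every step while the two pieces of state evolve.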

This may sound quaint in the era of trillion‑parameter models, but on structure‑heavy tasks with limited data—Sudoku, mazes, or ARC‑style pattern induction—these tiny loopers can match or outperform models many times their size. They don’t rely on verbose chain‑of‑thought or heavyweight test‑time sampling; they just do the work internally.

Why recursion helps

Published on The Digital Insider at https://is.gd/Z54zMW.
