The Sequence Radar: Two Drops, One Direction: The Week Agentic AI Got Practical | By The Digital Insider

DeepSeek v3.1 and Cohere Command A Reasoning push the boundaries of agentic AI.

Next Week in The Sequence:

Our series about interpretability continues with an overview of intrinsic interpretability.

In the AI of the Week installment we will discuss DeepSeek v3.1.

In the opinion section, we will look at the inference provider market and the challenges of commoditization.


📝 Editorial: Two Drops, One Direction: The Week Agentic AI Got Practical

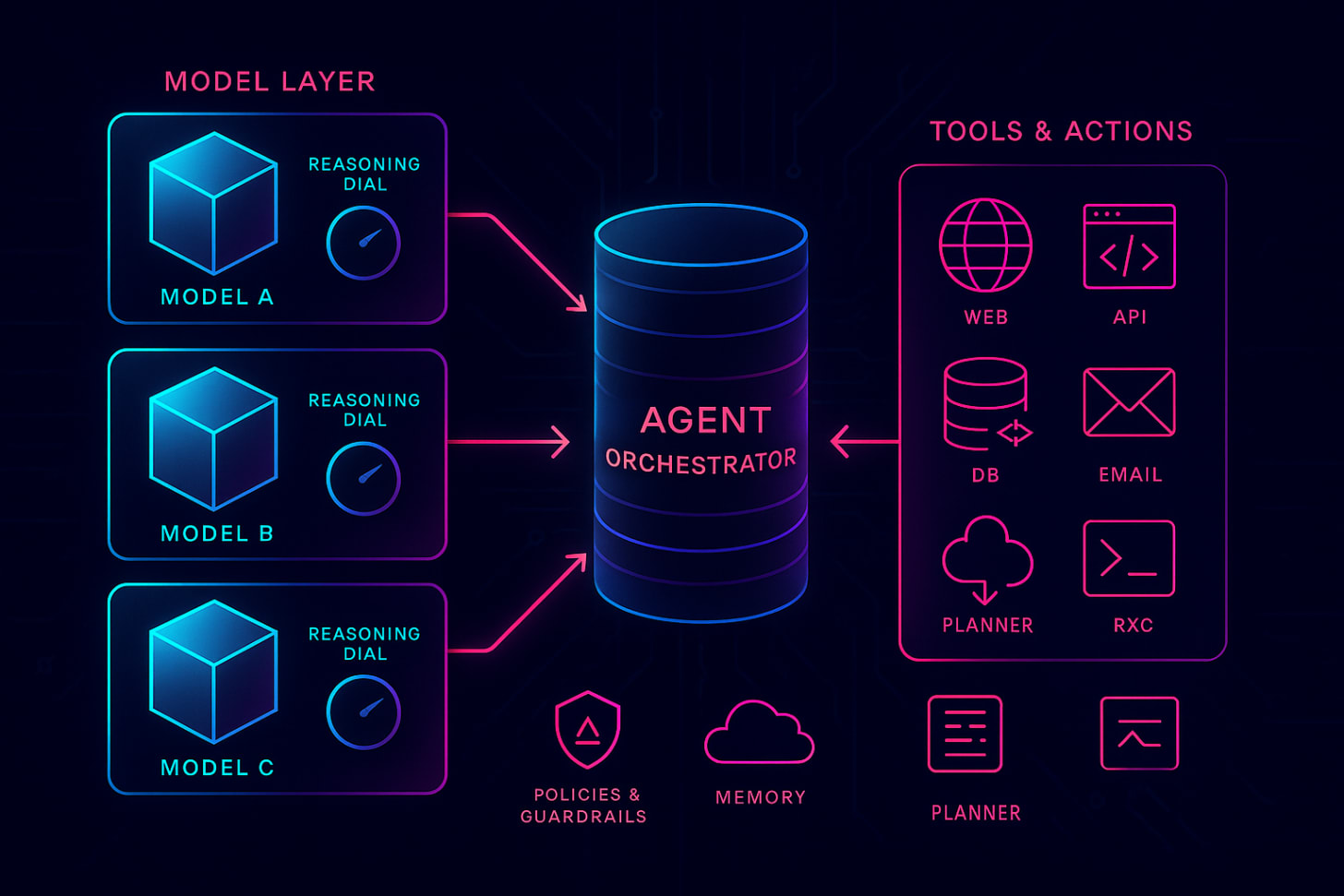

Two standout model releases this week—DeepSeek’s V3.1 and Cohere’s Command A Reasoning—move agentic AI from buzz to build. DeepSeek leans into speed and tool competence; Cohere leans into control and enterprise readiness. The common thread is practical reasoning on tap: you can dial it up when a task is thorny and dial it down when you need answers fast, all without re-architecting your stack.

DeepSeek-V3.1’s headline is a hybrid inference design. The same model can operate in a direct-response mode for quick tasks or a deliberative mode when deeper reasoning helps. That switch is exposed through simple templates and endpoints rather than elaborate scaffolding, so teams can reserve higher “cognition” for the few requests that warrant it. Alongside that, V3.1 tightens tool invocation and multi-step workflows, making it easier to build agents that plan, call functions, and recover from partial failures.
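To make the "recover from partial failures" idea concrete, here is a minimal client-side sketch of an agent running a planned sequence of tool calls with retries. It assumes tools are plain Python functions registered by name, and it replaces the model's planning step with a fixed list of calls. `run_plan`, `max_retries`, and the result dictionaries are hypothetical illustrations, not part of DeepSeek's API.

```python
from typing import Any, Callable

# Hypothetical stand-ins: plain Python functions play the role of the tools
# a model would call. None of these names come from DeepSeek's API.
Tool = Callable[..., Any]


def run_plan(plan: list[tuple[str, dict]], tools: dict[str, Tool],
             max_retries: int = 2) -> list[dict]:
    """Run planned tool calls in order, retrying a failing call.

    A step that still fails after max_retries extra attempts is recorded as
    an error instead of aborting the plan, so later steps still run.
    """
    results = []
    for name, args in plan:
        if name not in tools:
            results.append({"tool": name, "ok": False, "error": "unknown tool"})
            continue
        last_error = None
        for attempt in range(1 + max_retries):
            try:
                value = tools[name](**args)
            except Exception as exc:  # any exception counts as a retryable failure
                last_error = str(exc)
                continue
            results.append({"tool": name, "ok": True, "value": value,
                            "attempts": attempt + 1})
            break
        else:  # every attempt failed
            results.append({"tool": name, "ok": False, "error": last_error,
                            "attempts": 1 + max_retries})
    return results


# Usage: a search tool that times out once, then succeeds on retry.
_calls = {"search": 0}


def flaky_search(query: str) -> str:
    _calls["search"] += 1
    if _calls["search"] == 1:
        raise TimeoutError("timeout")
    return f"results for {query}"


demo = run_plan(
    [("search", {"query": "DeepSeek-V3.1"}), ("add", {"a": 2, "b": 3}), ("lookup", {})],
    {"search": flaky_search, "add": lambda a, b: a + b},
)
```

Recording failures per step, rather than raising, lets the agent (or the model on its next turn) decide whether to re-plan around a missing result.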

DeepSeek also keeps the door open for builders. The release emphasizes long-context stability, sensible defaults, and open availability of weights, so you can evaluate locally and deploy in varied environments. It’s a cost-performance story as much as a capability story: run it where you have capacity, keep latency predictable, and expand to bigger contexts without the usual brittleness. For startups and research teams, that blend of openness and efficiency lowers the barrier to shipping agentic features.

Cohere’s Command A Reasoning approaches the same destination from an enterprise-first angle. It packages reasoning, tool use, and multilingual competence with the guardrails large organizations expect—strong controls, observability, and predictable throughput. The model’s reasoning behavior can be toggled to trade depth for speed, which slots neatly into patterns like RAG, data analysis, and customer operations where tail latency matters. In short: a reasoning-forward model that still respects production SLOs.

What’s striking is the convergence in developer experience. Both launches treat tool calling and agent workflows as first-class, not afterthoughts; both make the “reasoning dial” explicit so you don’t pay for deep thinking on every request. That reduces orchestration glue, simplifies templates, and makes it feasible to standardize on one API surface while tuning behavior per task. The result is less pipeline complexity and more predictable performance.
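One way to picture "one API surface, tuned per task" is a table of task profiles feeding a single request builder. This is a hypothetical sketch: the task names and the `reasoning`, `max_output_tokens`, and `tools` fields are illustrative and do not correspond to DeepSeek's or Cohere's actual request parameters.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Profile:
    """Hypothetical per-task settings behind one shared request shape."""
    reasoning: bool          # deliberative mode on (slower, deeper) or off (fast)
    max_output_tokens: int   # output budget; deliberative tasks get more room
    tools: tuple[str, ...]   # tools the model may call for this task


# Illustrative task names and values only.
PROFILES = {
    "faq": Profile(reasoning=False, max_output_tokens=512, tools=()),
    "rag_answer": Profile(reasoning=False, max_output_tokens=1024, tools=("search",)),
    "data_analysis": Profile(reasoning=True, max_output_tokens=8192,
                             tools=("sql", "python")),
}
DEFAULT_PROFILE = Profile(reasoning=False, max_output_tokens=1024, tools=())


def build_request(task: str, prompt: str) -> dict:
    """Same payload structure for every task; only the profile values change."""
    profile = PROFILES.get(task, DEFAULT_PROFILE)
    return {
        "messages": [{"role": "user", "content": prompt}],
        "reasoning": profile.reasoning,
        "max_output_tokens": profile.max_output_tokens,
        "tools": list(profile.tools),
    }
```

Unknown tasks fall back to the fast, tool-free default, so deep reasoning is opt-in per task rather than paid on every request.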

Zooming out, this week reads like a milestone for pragmatic reasoning systems. DeepSeek-V3.1 prioritizes openness and flexible deployment; Command A Reasoning prioritizes governance and enterprise control. Different philosophies, same payoff: more capable agents that teams can actually ship. If you’re building now, the playbook is clearer than ever—long context, explicit reasoning modes, robust tool interfaces, and an integration story that serves the product, not the other way around.

🔎 AI Research

Title: Retrieval-Augmented Reasoning with Lean Language Models

AI Lab: The Alan Turing Institute, University of Oxford, University of Cambridge, Imperial College London

Summary: This report introduces a lightweight language model framework that combines retrieval-augmented generation (RAG) with reasoning to handle complex, domain-specific queries in secure or resource-constrained environments. By fine-tuning lean Qwen2.5-Instruct models on synthetic reasoning traces and summarised documents, the system achieves near-frontier performance while remaining deployable locally.
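The retrieval half of a RAG pipeline fits in a few lines. This toy version, assuming a small in-memory corpus, ranks passages by bag-of-words cosine similarity and stitches the top hits into a prompt. It is a generic illustration, not the retriever or prompt format used in the report; `retrieve` and `build_prompt` are hypothetical names.

```python
import math
import re
from collections import Counter


def _bag_of_words(text: str) -> Counter:
    """Lowercased alphanumeric token counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return up to k docs with nonzero similarity, best first (ties keep corpus order)."""
    q = _bag_of_words(query)
    scored = sorted(
        ((_cosine(q, _bag_of_words(d)), i) for i, d in enumerate(docs)),
        key=lambda s: (-s[0], s[1]),
    )
    return [docs[i] for score, i in scored[:k] if score > 0]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Number the retrieved passages and place them ahead of the question."""
    passages = retrieve(query, docs, k)
    context = "\n".join(f"[{n}] {p}" for n, p in enumerate(passages, start=1))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer using only the context above."


# Usage with a tiny, made-up corpus.
DOCS = [
    "Patients with asthma should avoid smoke.",
    "The clinic is open on weekdays.",
    "Asthma inhalers relieve symptoms.",
]
print(build_prompt("What relieves asthma symptoms?", DOCS))
```

A production system would swap the bag-of-words scorer for dense embeddings, but the shape (score, rank, cut to k, prepend to the prompt) stays the same.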

Title: Atom-Searcher: Enhancing Agentic Deep Research via Fine-Grained Atomic Thought Reward

AI Lab: Ant Group

Summary: Atom-Searcher proposes a reinforcement learning framework that decomposes reasoning into "Atomic Thoughts," enabling fine-grained supervision through Reasoning Reward Models (RRMs). This approach resolves gradient conflicts and reward sparsity in outcome-based RL, achieving state-of-the-art performance across seven in-domain and out-of-domain research benchmarks.
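The intuition behind denser rewards can be shown with a simple blend of per-thought scores and a final-answer reward. In this sketch the atomic-thought scores are assumed to come from some reasoning reward model, and the linear decay of their weight over training is an assumption chosen for illustration, not Atom-Searcher's published recipe. `blended_reward` and its parameters are hypothetical.

```python
def blended_reward(atomic_scores: list[float], outcome: float,
                   step: int, total_steps: int,
                   w_start: float = 0.5, w_end: float = 0.0) -> float:
    """Blend a dense process signal with a sparse outcome reward.

    atomic_scores: per-thought scores in [0, 1], assumed to come from a
        reasoning reward model (hypothetical input).
    outcome: 1.0 if the final answer is correct, else 0.0.
    The process weight moves linearly from w_start to w_end over training;
    this schedule is an illustrative assumption.
    """
    progress = min(max(step / total_steps, 0.0), 1.0)
    w = w_start + (w_end - w_start) * progress
    process = sum(atomic_scores) / len(atomic_scores) if atomic_scores else 0.0
    return w * process + (1.0 - w) * outcome


# Early in training, a wrong answer with good intermediate thoughts still earns
# partial credit; at the end of the schedule, only the outcome counts.
early = blended_reward([1.0, 0.5], outcome=0.0, step=0, total_steps=100)
late = blended_reward([1.0, 0.5], outcome=0.0, step=100, total_steps=100)
```

The point of the blend is that a failed rollout is no longer a flat zero: intermediate steps that the reward model rates well still produce a learning signal.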

Title: Scalable Private Partition Selection via Adaptive Weighting

AI Lab: MIT and Google Research

Summary: This paper introduces MaxAdaptiveDegree (MAD) and MAD2R, new adaptive weighting algorithms for differentially private partition selection that scale to massive datasets. By rerouting excess weight from frequent to rare items while maintaining privacy guarantees, the algorithms provably outperform existing parallel baselines and handle data with hundreds of billions of entries.

Title: MindJourney: Test-Time Scaling with World Models for Spatial Reasoning

AI Lab: UMass Amherst, JHU, HKUST, Microsoft Research, Harvard

Summary: MindJourney couples vision–language models with controllable video diffusion world models, allowing them to explore imagined 3D spaces at test time. Through Spatial Beam Search, the framework boosts spatial reasoning accuracy by over 8% across benchmarks without fine-tuning, surpassing even RL-based test-time scaling methods.
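Beam search is the backbone of the search procedure described here. The sketch below is a generic beam search over action sequences; the `step` and `score` callables are hypothetical stand-ins for the world model that renders an imagined view and the VLM that judges it, and the toy grid exists only to make the code runnable. This is not MindJourney's implementation.

```python
from typing import Any, Callable, Sequence


def beam_search(start: Any, actions: Sequence[str],
                step: Callable[[Any, str], Any], score: Callable[[Any], float],
                beam_width: int = 2, depth: int = 3) -> tuple[float, list[str], Any]:
    """Generic beam search over action sequences.

    step(state, action) -> next state (stand-in for rendering an imagined view).
    score(state) -> float (stand-in for a model judging how useful that view is).
    Keeps the beam_width best partial trajectories at each depth and returns
    the best (score, trajectory, state) seen anywhere in the search.
    """
    beam = [(score(start), [], start)]
    best = beam[0]
    for _ in range(depth):
        candidates = []
        for _, trajectory, state in beam:
            for action in actions:
                nxt = step(state, action)
                candidates.append((score(nxt), trajectory + [action], nxt))
        candidates.sort(key=lambda c: c[0], reverse=True)
        beam = candidates[:beam_width]
        if beam and beam[0][0] > best[0]:
            best = beam[0]
    return best


# Toy usage: move on a grid toward a target viewpoint.
TARGET = (2, 1)
MOVES = {"N": (0, 1), "E": (1, 0)}


def toy_step(pos: tuple[int, int], action: str) -> tuple[int, int]:
    dx, dy = MOVES[action]
    return (pos[0] + dx, pos[1] + dy)


def toy_score(pos: tuple[int, int]) -> float:
    return -(abs(TARGET[0] - pos[0]) + abs(TARGET[1] - pos[1]))


best_score, best_traj, best_state = beam_search((0, 0), list(MOVES), toy_step, toy_score)
```

The beam width is the compute knob: wider beams explore more imagined viewpoints per question at proportionally higher test-time cost.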

Title: Deep Think with Confidence

AI Lab: Meta AI, UC San Diego

Summary: The paper introduces DeepConf, a lightweight test-time method that leverages token-level confidence signals to filter low-quality reasoning traces, improving both accuracy and efficiency in ensemble reasoning. On challenging benchmarks like AIME 2025, DeepConf achieves up to 99.9% accuracy while cutting token generation by as much as 84.7%, making it a practical solution for scalable reasoning.
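A simplified version of confidence-filtered voting looks like this. Token confidence is taken as the negative mean log-probability of the top-k candidate tokens, and a trace's confidence is a plain average of its token confidences, which may differ from the aggregation the paper uses. The kept traces then vote with their confidences as weights. Function names and the `keep_fraction` parameter are hypothetical.

```python
import math
from collections import defaultdict


def token_confidence(top_logprobs: list[float]) -> float:
    """Negative mean log-probability of the top-k candidates at one position.

    A peaked distribution (one likely token, the rest unlikely) scores high.
    """
    return -sum(top_logprobs) / len(top_logprobs)


def trace_confidence(per_token_top_logprobs: list[list[float]]) -> float:
    """Plain average of token confidences over one reasoning trace."""
    scores = [token_confidence(t) for t in per_token_top_logprobs]
    return sum(scores) / len(scores)


def filtered_vote(traces: list[tuple[str, float]], keep_fraction: float = 0.5) -> str:
    """Keep the most confident traces, then run a confidence-weighted majority vote.

    traces: (final_answer, trace_confidence) pairs.
    """
    ranked = sorted(traces, key=lambda t: t[1], reverse=True)
    kept = ranked[:max(1, math.ceil(len(ranked) * keep_fraction))]
    votes: dict[str, float] = defaultdict(float)
    for answer, conf in kept:
        votes[answer] += conf
    return max(votes, key=votes.get)


# Three low-confidence traces agree on "A"; one high-confidence trace says "B".
TRACES = [("A", 1.0), ("A", 1.0), ("A", 1.0), ("B", 2.5)]
print(filtered_vote(TRACES, keep_fraction=1.0))   # weighted vote over all traces
print(filtered_vote(TRACES, keep_fraction=0.25))  # only the most confident trace
```

With every trace kept, the three agreeing traces outvote the single confident one and the answer is "A"; keeping only the top quarter flips it to "B". That is the filtering effect in miniature, and discarding traces early is also where the token savings come from.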

🤖 AI Tech Releases

DeepSeek v3.1

DeepSeek released V3.1, a hybrid model that can answer directly or reason step by step, with stronger tool use for agent workflows.

Command A Reasoning

Cohere released Command A Reasoning, its new model optimized for enterprise workflows.

📡 AI Radar

Anthropic is nearing a deal to raise up to $10B—among the largest AI megarounds—according to Bloomberg.

Agentic platform Manus AI projects a $90M annual revenue run rate, offering the first look at its business scale, per Bloomberg.

Meta and Midjourney partnered on image generation capabilities.

OpenAI will open its first India office in New Delhi to build local partnerships and developer/education programs, following the launch of its ₹399/month ChatGPT Go plan.

Anthropic is bundling Claude Code into Claude for Enterprise, adding deeper integrations and admin controls for org-wide deployment.

FieldAI raised $405M across multiple rounds to build universal “robot brains” (general-purpose embodied AI with a physics layer) for diverse robots.

Firecrawl raised a $14.5M Series A for its open-source AI web crawler; the company says it’s profitable and still wants to hire AI “agents” as employees.

Eight Sleep raised $100M to expand its AI-powered sleep platform, including the Pod and a new “Sleep Agent,” after surpassing $500M in Pod sales.

SRE.ai came out of stealth with a $7.2M seed (Salesforce Ventures, Crane) to automate complex DevOps workflows with multi-platform AI agents.

Keychain raised $30M Series B and launched KeychainOS, an AI operating system aiming to replace or augment ERP for CPG manufacturers.

TensorZero raised a $7.3M seed to build open-source, production-grade infrastructure for enterprise LLM apps, amid fast GitHub growth.

Crusoe, an AI data-center infrastructure provider, is in talks to raise at a ~$10B valuation to fund expansion.


Published on The Digital Insider at https://is.gd/cT5x66.
