The Sequence Research #538: DeepSeek-Prover-V2: Meet the New Addition to the DeepSeek Family | By The Digital Insider

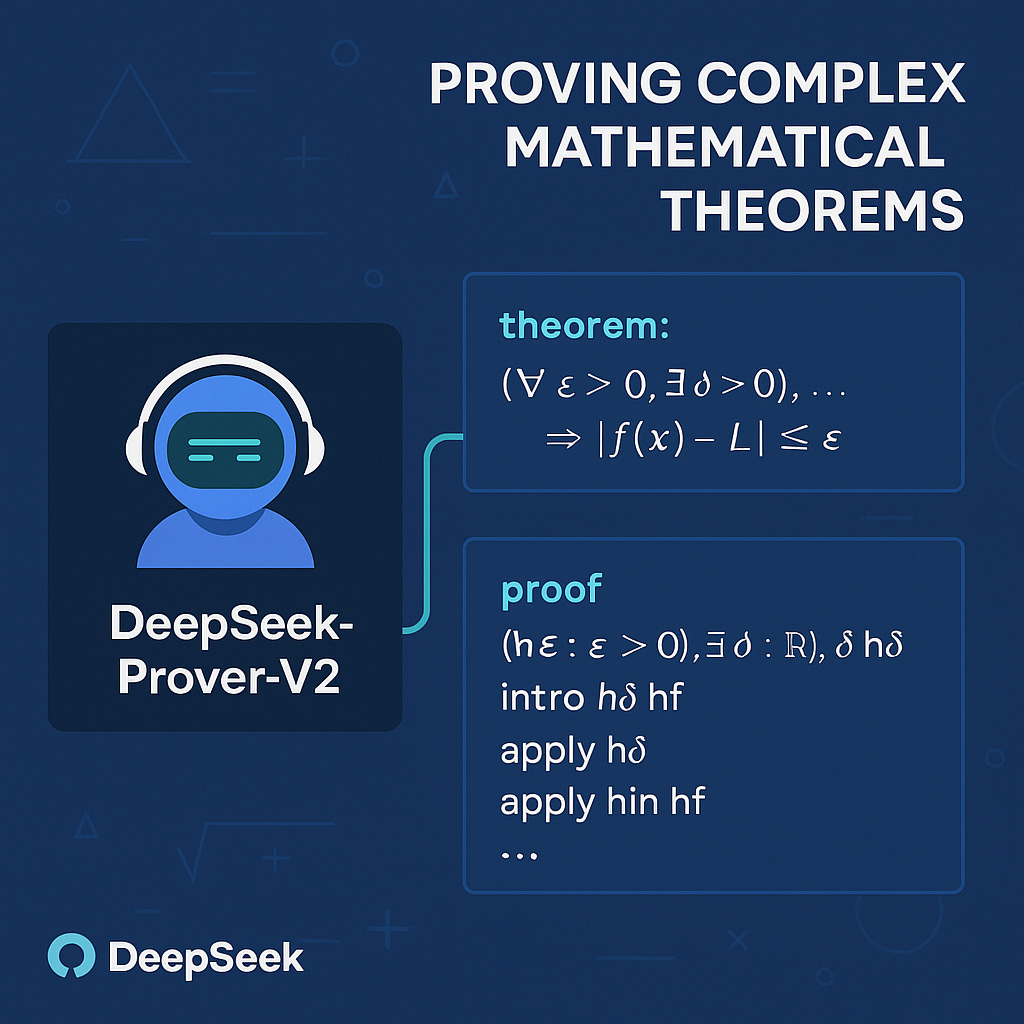

The new model focuses on formal mathematical reasoning.

Theorem proving is one of the cornerstones of mathematics, and one that is key if we want AI to help advance science. DeepSeek seems to have taken an interest in this space with its latest model.

DeepSeek-Prover-V2 marks a significant leap in neural theorem proving by tightly integrating informal reasoning with formal proof synthesis. Developed by DeepSeek-AI, this model narrows the gap between intuitive natural language reasoning and the syntactic rigor of formal proof assistants like Lean 4. While most large language models (LLMs) operate in a heuristic, data-driven mode, DeepSeek-Prover-V2 employs a disciplined, structured approach powered by subgoal decomposition and reinforcement learning.

At its core, the system uses DeepSeek-V3 to decompose a complex theorem into intermediate subgoals, expressed in both natural language and formal Lean syntax. These are then resolved by a specialized 7B prover, and the composed solutions are used to train the larger 671B model. This recursive, hybrid reasoning pipeline enables scalable synthesis of formal proofs and establishes new performance records across MiniF2F, ProofNet, PutnamBench, and a newly introduced ProverBench.
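To make the decomposition step concrete, here is a minimal Lean 4 sketch of what "subgoals expressed in formal Lean syntax" could look like. The statement is a toy chosen for illustration and the theorem names are hypothetical; none of it comes from the model's output or training data. It assumes only core Lean 4 (no Mathlib). The first theorem plays the role of the decomposed sketch, with each subgoal stated as a `have` and left open via `sorry`; the second shows the same skeleton after each subgoal has been resolved independently and the pieces composed.

```lean
-- Illustrative toy example, not taken from DeepSeek-Prover-V2.
-- Stage 1 (decomposition): the goal is split into intermediate subgoals.
-- Each `sorry` marks a subgoal that still needs its own proof.
theorem toy_sketch (a b : Nat) : a + b + 0 = b + a := by
  -- Subgoal 1 (informal): adding zero on the right changes nothing.
  have h1 : a + b + 0 = a + b := by
    sorry
  -- Subgoal 2 (informal): addition of natural numbers is commutative.
  have h2 : a + b = b + a := by
    sorry
  -- Composition: chain the two equalities to close the original goal.
  exact h1.trans h2

-- Stage 2 (resolution): every subgoal is proved on its own,
-- and the skeleton above is reused unchanged.
theorem toy_resolved (a b : Nat) : a + b + 0 = b + a := by
  have h1 : a + b + 0 = a + b := by
    exact Nat.add_zero (a + b)
  have h2 : a + b = b + a := by
    exact Nat.add_comm a b
  exact h1.trans h2
```

The point of the structure is that the final `exact h1.trans h2` line does not change between the two stages: once the skeleton type-checks with placeholders, each subgoal becomes a smaller, independent proving task, which is the kind of work the paragraph above assigns to the 7B prover.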

2. Architecture and Pipeline


Published on The Digital Insider at https://is.gd/ldgxvl.
